Update: the sphinx setup wasn’t exactly ‘out of the box’. Sphinx now searches the fastest and its relevancy increased (charts updated below).

Motivation

Later this month we will be presenting a half-day tutorial on Open Search at SIGIR. It will focus on how to use open source software and cloud services to build and quickly prototype advanced search applications. Open Search isn’t just about building a Google-like search box on a free technology stack, but also about encouraging the community to extend and embrace search technology to improve the relevance of any application.

For example, one non-search application of BOSS leveraged the Spelling service to spell-correct video comments before handing them off to a spam filter. Spelling correction normalizes popular words that spammers intentionally misspell to get around spam models that rely on term statistics, and thus can increase spam detection accuracy.

We have split up our upcoming talk into two sections:

- Services: Open Search Web APIs (Yahoo! BOSS, Twitter, Bing, and Google AJAX Search), interesting mashup examples, ranking models and academic research that leverage or could benefit from such services.

- Software: How to use popular open source packages to build vertical indexes over your own data.

While researching for the Software section, I was quite surprised by the number of open source vertical search solutions I found:

- Lucene (Nutch, Solr, Hounder), Sphinx, zettair, Terrier, Galago, Minion, MG4J, Wumpus, RDBMS (mysql, sqlite), Indri, Xapian, grep …

And I was even more surprised by the lack of comparisons between these solutions. Many of these platforms advertise their performance benchmarks, but those benchmarks are run in isolation, use different data sets, and seem more focused on speed than on, say, relevance.

The best paper I could find that compared performance and relevance of many open source search engines was Middleton+Baeza’07, but the paper is quite old now and didn’t make its source code and data sets publicly available.

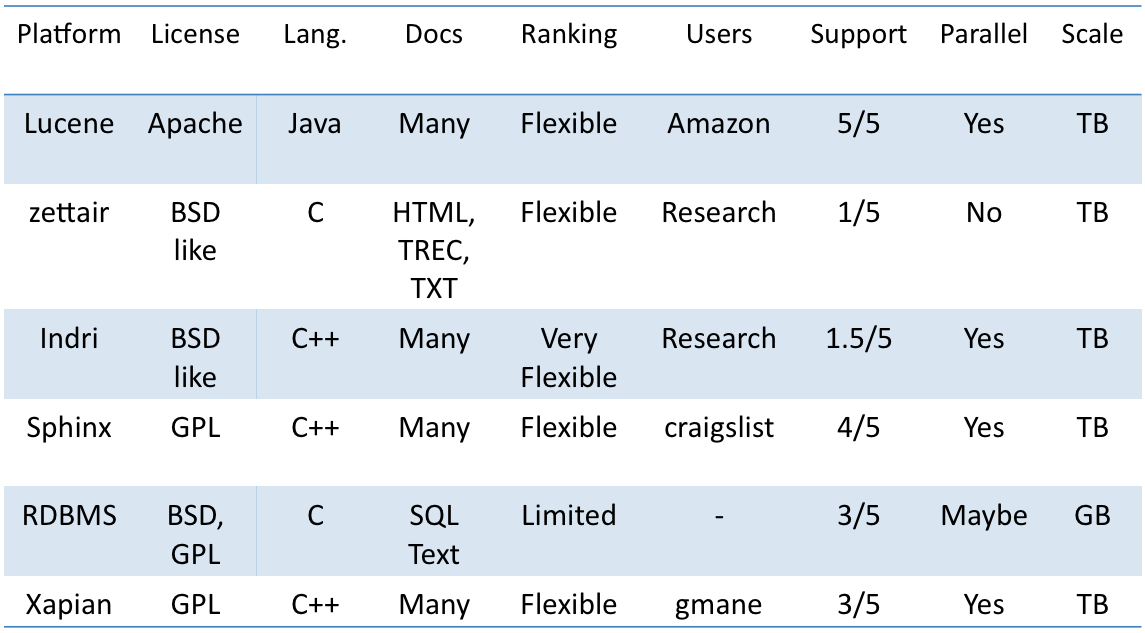

So, I developed a couple of fun, off-the-wall experiments to test some of the popular vertical indexing solutions (mainly for building code examples; this is just a simple, quick evaluation and not for SIGIR, so read the disclaimer in the Conclusion section). Here’s a table of the platforms I selected to study, with some high-level feature breakdowns:

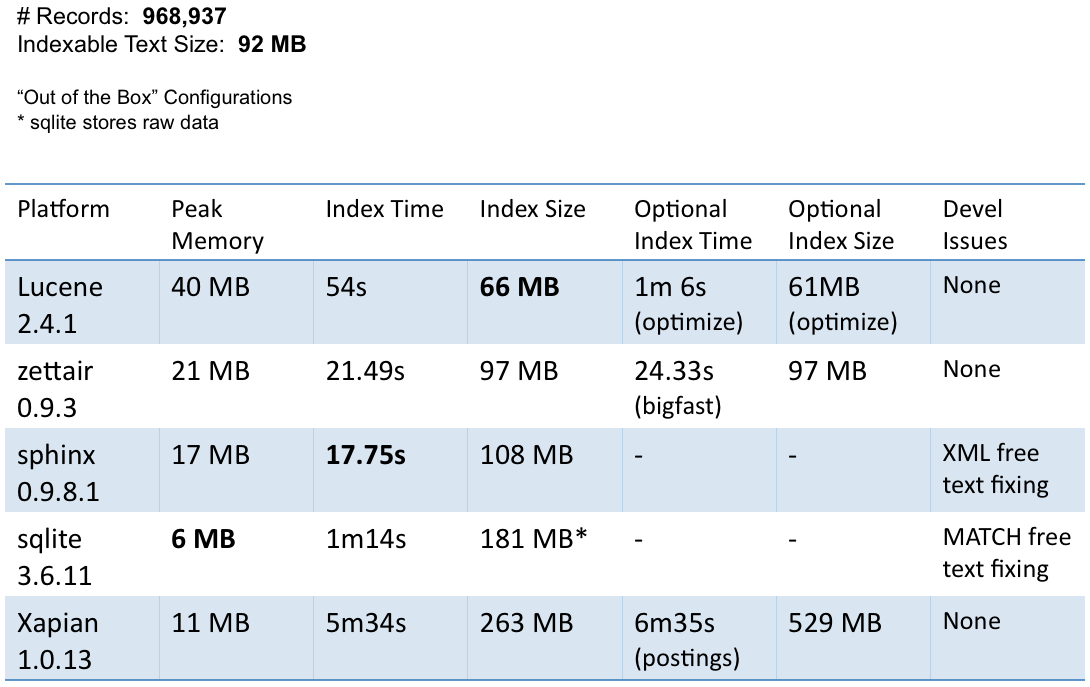

One key design decision I made was not to change any numerical tuning parameters. I really wanted to test “Out of the Box” performance to simulate the common developer scenario. Plus, it takes forever to optimize parameters fairly across multiple platforms and different data sets, especially for an over-the-weekend benchmark (see the disclaimer in the Conclusion section).

Also, I tried my best to write each experiment natively for each platform using the expected library routines or binary commands.

Twitter Experiment

For the first experiment, I wanted to see how well these platforms index Twitter data. Twitter is becoming very mainstream, and its real-time nature and brevity differ greatly from traditional web content (which these search platforms are generally more tailored for), so its data should make for some interesting experiments.

So I proceeded to crawl Twitter to generate a sample data set. After about a full day and night, I had downloaded ~1M tweets (~10/second).

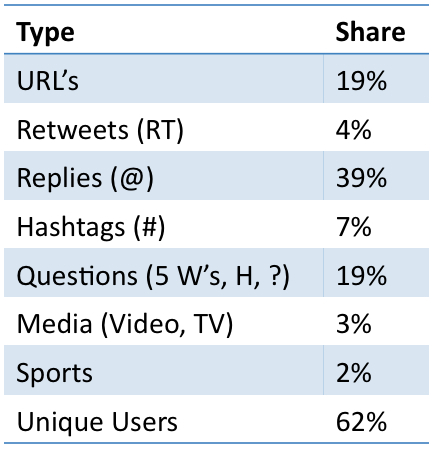

But before indexing, I did some quick analysis of my acquired Twitter data set:

# of Tweets: 968,937

Indexable Text Size (user, name, text message): 92MB

Average Tweet Size: 12 words

Types of Tweets based on simple word filters:

Very interesting stats here – especially the high percentage of tweets that seem to be asking questions. Could Twitter (or an application) better serve this need?

Here’s a table comparing the indexing performance over this Twitter data set across the selected vertical search solutions:

Lucene was the only solution that produced an index smaller than the input data. Running it in optimize mode shaves off another 5 megabytes, at the cost of adding another ten seconds to indexing. Sphinx and zettair index the fastest. Interestingly, I ran zettair in big-and-fast mode (which uses 300+ megabytes of RAM), but it ran 3 seconds slower (maybe because of the nature of tweets). Xapian ran 5x slower than sqlite (which stores the raw input data in addition to the index) and produced the largest index files. The default index_text method in Xapian stores positional information, which blew the index size up to 529 megabytes; one must use index_text_without_positions to make the size more reasonable. I checked my Xapian code against the examples and documentation to see if I was doing something wrong, but I couldn’t find any discrepancies.

I also included a column about development issues I encountered. zettair was by far the easiest to use (a simple command line), but it required transforming the input data into a new format. I had some text issues with sqlite (which also needs to be recompiled with FTS3 enabled) and sphinx, given their strict input constraints. sphinx also requires a conf file, and it took some searching to find complete examples of one. Lucene, zettair, and Xapian were the most forgiving when it came to accepting text inputs (zero errors).
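To see why storing positions inflates an index, here is a toy Python sketch. It is not Xapian's actual storage format. It counts the integers a simple in-memory inverted index keeps with and without term positions. The sample tweets, the lowercase whitespace tokenizer, and the "one integer per doc id or position" size proxy are all assumptions made for illustration.

```python
from collections import defaultdict


def build_index(docs, with_positions):
    """Toy inverted index: term -> {doc_id: [positions]}.

    When with_positions is False the position lists stay empty, so only
    the (term, doc_id) pair is recorded.
    """
    index = defaultdict(dict)
    for doc_id, text in enumerate(docs):
        # Hypothetical tokenizer: lowercase + whitespace split.
        for pos, term in enumerate(text.lower().split()):
            positions = index[term].setdefault(doc_id, [])
            if with_positions:
                positions.append(pos)
    return index


def stored_integers(index):
    """Rough size proxy: one integer per doc id plus one per stored position."""
    return sum(1 + len(positions)
               for postings in index.values()
               for positions in postings.values())


tweets = [
    "going to the game tonight",
    "anyone going to the game",
    "the game was great great great",
]
print(stored_integers(build_index(tweets, with_positions=False)))  # 14
print(stored_integers(build_index(tweets, with_positions=True)))   # 30
```

On these three made-up tweets, the positional variant stores 30 integers versus 14. Every token occurrence adds a position on top of its (term, document) pair. Real engines compress their postings, so actual size ratios will differ.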

Measuring Relevancy: Medical Data Set

While this is a fun performance experiment for indexing short text, it does not measure search performance or relevancy.

To measure relevancy, we need judgment data that tells us how relevant a document result is to a query. The best publicly downloadable data set I could find (almost all of them require mailing in CDs) was from the TREC-9 Filtering track. It provides a collection of 196,403 medical journal references totaling ~300MB of indexable text (titles, authors, abstracts, keywords), with an average of 215 tokens per record. More importantly, this data set provides judgment data for 63 query-like tasks in the form of “<task, document, 2|1|0 rating>” (2 is very relevant, 1 is somewhat relevant, 0 is not rated). An example task is “37 yr old man with sickle cell disease.” To turn this into a search benchmark, I treat these tasks as OR’ed queries. To measure relevancy, I compute the average DCG across the 63 queries for results in positions 1-10.
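The scoring recipe described above can be sketched in Python under a few stated assumptions:

- The post doesn't say which DCG variant was used. This sketch uses the common form with gain equal to the rating and a log2(rank + 1) discount.
- Unjudged documents get a gain of 0.
- The average is taken over every judged query.
- The `to_or_query` helper and its `OR` keyword are hypothetical, since each engine has its own query syntax. Lucene's classic query parser, for instance, already ORs whitespace-separated terms by default.

```python
import math


def to_or_query(task, operator="OR"):
    """Join a task's words into a disjunctive query (hypothetical helper;
    the operator keyword varies by engine)."""
    return f" {operator} ".join(task.split())


def dcg_at_k(ranked_doc_ids, judgments, k=10):
    """DCG@k with gain = graded rating (2, 1, 0) and a log2(rank + 1) discount.

    Documents missing from `judgments` contribute a gain of 0.
    """
    return sum(judgments.get(doc_id, 0) / math.log2(rank + 1)
               for rank, doc_id in enumerate(ranked_doc_ids[:k], start=1))


def average_dcg(run, qrels, k=10):
    """Mean DCG@k over every judged query.

    run:   query_id -> ranked list of doc ids returned by the engine
    qrels: query_id -> {doc_id: rating}
    Queries with no results in `run` score 0.
    """
    if not qrels:
        return 0.0
    scores = [dcg_at_k(run.get(qid, []), judged, k) for qid, judged in qrels.items()]
    return sum(scores) / len(scores)


print(to_or_query("37 yr old man with sickle cell disease"))
print(dcg_at_k(["d1", "d2", "d3"], {"d1": 2, "d3": 1}))  # 2.5
```

For the example task, `to_or_query` yields `37 OR yr OR old OR man OR with OR sickle OR cell OR disease`. A run whose top three results are a very relevant document, an unjudged one, and a somewhat relevant one scores 2/1 + 0 + 1/2 = 2.5.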

With this larger data set (3x larger than the Twitter one), zettair’s indexing performance improves, which makes sense since it’s designed more for larger corpora. zettair’s actual search speed is probably a bit faster than measured, because its command-line search utility prints some unnecessary stats. For multi-searching in sphinx, I developed a Java client (hoping to make it competitive with Lucene, the one to beat) that connects to the sphinx searchd server via a socket (that’s the API model in their examples). Sphinx returned searches the fastest, ~3x faster than Lucene, and its indexing time was on par with zettair. Lucene obtained the highest relevance and the smallest index size. Its index time could probably be improved by fiddling with its merge parameters, but I wanted to avoid numerical adjustments in this evaluation. Xapian has search performance very similar to Lucene’s but with significant indexing costs (both time and space > 3x). sqlite has the worst relevance because it doesn’t sort by relevance, nor does it seem to provide a ranking function to ORDER BY.

Conclusion & Downloads

Based on these preliminary results and anecdotal information I’ve collected from the web and from people in the field (with more emphasis on the latter), I would probably recommend Lucene for many vertical search indexing applications. Lucene is an IR library, so use a wrapper platform like Solr with Nutch if you need all the search dressings like snippets, crawlers, and servlets. It is especially suitable if you need something that runs decently well out of the box (as that’s mainly what I’m evaluating here) and has community support.

Keep in mind that these experiments are still very early (done on a weekend budget) and can and should be improved greatly with bigger and better data sets, tuned implementations, and community support (I’d be the first one to say these are far from perfect, so I open sourced my code below). It’s pretty hard to make a benchmark that everybody likes (especially in this space where there haven’t really been many … and I’m starting to see why :)). That’s not necessarily because there are always winners/losers and biases in benchmarks, but because there are so many different types of data sets, platform APIs, and tuning parameters (at least databases support SQL!). This is just a start. I see this as a very evolutionary project that requires community support to get it right. Take the results here for what they’re worth, and still run your own tuned benchmarks.

To encourage further search development and benchmarks, I’ve open sourced all the code here:

http://github.com/zooie/opensearch/tree/master

Happy to post any new and interesting results.

There is another brand new, great open source search engine: FASTCAT!!

It’s fast, reliable and java-based.

If you have JDK 1.5+ in your environment, you can start a search server with just one click.

Check it out!

http://code.google.com/p/google-fastcat/

Very interesting post. I currently use Ferret (the Ruby version of Lucene), but I’m thinking of moving to Sphinx because the plugin I use for Ferret (acts_as_ferret) is unmaintained. The alternative would be Solr (acts_as_solr, though I don’t know how well it works with Ruby).

My big worries are the scale limit (just 100GB for sphinx indexes? Or have I misunderstood?) and that lucene is way slower than sphinx for the indexing.

Do you think it’s better to reindex all the data every night? (Right now Ferret applies updates automatically, but with Sphinx it would need a full reindex from time to time.)

@XYZ, IMO the article is wrong about Sphinx scale. There are no hard limits on index size, and the biggest cluster, as I mentioned, indexes over 2 TB (and over 2 billion documents, BTW).

@Lee Fyock, you can use kill-lists with Sphinx to delete records. This is actually pretty much what Lucene/Solr do internally. Except we’re exposing these guts 🙂

@Vik, chances are that indexes that do *not* fit in RAM will show somewhat different performance. Anyway, gotta craft my own benchmark that wouldn’t compare apples to oranges in Sphinx case. For one, I recall one that compared Sphinx with stopwords and Lucene without stopwords and concluded that Sphinx is so much slower… 😉

@Andrew

Thanks. As mentioned below, I referenced the scale from the sphinx page but I’ll update it to terabytes.

Please contribute your benchmark to the git linked above if you can.

An interesting start for comparison.

I would’ve picked different research engines: Indri and Terrier. These are two of the most widely used research platforms; more so than Zettair or Xapian.

If you get the opportunity, I would encourage you to give those a try.

One thing I would like to see is how the different search engines deal with updates. Can you easily add/modify search entries, do you have to deal with delta indexes, or can you only re-index everything?

Interesting comparison. One thing caught my eye: where does the 100 GB index size for Sphinx come from? For example, here’s a reference from 2007:

“For example on BoardReader we’re using this combination to build search against over 1 billion of forum posts totaling over 1.5TB of data”

Where “this combination” stands for Sphinx + MySQL. I imagine it only went up in the two years since then.

Hello,

I’ve mainly looked at the pictures because right now this topic is not “hot” for me.

But quite a while ago I was researching this and found and tested mnoGoSearch.

Any plans to compare this one too?

This is a very useful article and draws similar conclusions to what we observed. We’ve used Solr/Lucene for building CiteSeerX. CiteSeerX indexes nearly 1.5 M documents and is growing weekly. Lucene works reasonably well and has many features for information extraction plugins that we find quite useful. The open source code for CiteSeerX is available as SeerSuite on SourceForge.

We find more actual applications and researchers using Lucene than Indri and Terrier. There also seems to be more industrial and government support for Solr/Lucene. However, it would be good to make a comparison with both of these.

To be technically correct, this is not a comparison of open source search engines but open source indexers. Crawlers do not come with Lucene and some of the others.

We have a project to integrate Heritrix through middleware to Solr/Lucene to make a true open source search engine. The code will soon be released on our SeerSuite site. Crawling can be a crucial part of any of these systems. Any comments would be appreciated.

Lee Giles

I agree this is a very useful search article and we found roughly similar results with our data

Interesting comparison.

One question I hoped to see answered concerns scalability.

– Can these systems handle incremental updates?

Assume you have ~1M tweets and I download another 10.

Can I simply ‘add’ these 10 or do I need to reindex ‘the whole thing’?

We’ve been using acts_as_solr for a couple of years, and are thinking of switching to Sphinx, mainly because re-indexing our dataset takes a few hours with solr, and around 5 minutes with Sphinx.

Both acts_as_solr and thinking_sphinx are decent Rails plugins that are pretty easy to get going.

With acts_as_solr, you can send updates for individual records to be reindexed. Sphinx has a delta index which you can periodically merge into the main index. I prefer the acts_as_solr method, since record deletions can be pushed into the index; the thinking_sphinx method means you have to re-index the entire dataset if records are deleted. Unless I’m wrong. The good news is that reindexing is super-fast with Sphinx.
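The delta-index and kill-list approach discussed in this thread can be illustrated with a toy in-memory sketch. `MainDeltaIndex` and its methods are hypothetical names. They are not Sphinx's or thinking_sphinx's API. The search is a linear scan rather than a real inverted index. The point is only to show how updates and deletions can be served before the next full rebuild.

```python
class MainDeltaIndex:
    """Toy main + delta index with a kill list (hypothetical class).

    main:   rebuilt rarely (e.g. a nightly full reindex)
    delta:  new or changed records since the last rebuild
    killed: ids whose copies in `main` must be hidden (deleted or superseded)
    """

    def __init__(self, records):
        self.main = dict(records)   # doc_id -> text
        self.delta = {}             # doc_id -> text
        self.killed = set()         # doc_ids masked in main

    def upsert(self, doc_id, text):
        self.delta[doc_id] = text
        self.killed.add(doc_id)     # hide any stale copy in main

    def delete(self, doc_id):
        self.delta.pop(doc_id, None)
        self.killed.add(doc_id)

    def search(self, term):
        """Return sorted ids of docs containing `term` (case-insensitive)."""
        term = term.lower()
        hits = {d for d, t in self.main.items()
                if d not in self.killed and term in t.lower().split()}
        hits |= {d for d, t in self.delta.items() if term in t.lower().split()}
        return sorted(hits)

    def merge(self):
        """Fold the delta into main, as a periodic merge or rebuild would."""
        for doc_id in self.killed:
            self.main.pop(doc_id, None)
        self.main.update(self.delta)
        self.delta.clear()
        self.killed.clear()


idx = MainDeltaIndex({1: "sphinx is fast", 2: "lucene is flexible"})
idx.upsert(3, "sphinx delta index")
idx.delete(1)
print(idx.search("sphinx"))  # [3]
```

Deleted or updated ids go into the kill list. The kill list masks stale copies in the main index until `merge` folds everything together. In this model, a deletion does not force an immediate full reindex.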

>>and that lucene is way slower than sphinx for the indexing.

I wouldn’t take that away from this benchmark. There are a lot of variables at play. Depending on the different tokenization and filter options for each engine, a comparison is not easy to judge from simple tests. If you use the StandardAnalyzer in Lucene, there is a lot of extra processing going on. There is no real default Analyzer in Lucene, so it’s hard to say what out of the box is. Another search engine might do very simple analysis by default, and using a simpler Analyzer can be *many* times faster. Also, which engines store positional info, and which don’t? Lucene does by default, but you can turn it off. Also, a C program can access as much RAM as is available, while a Java program is limited by what it’s given as a command-line parameter. There are so many variables between the engines that these types of benchmarks are of limited usefulness. The previously cited paper from ’07 suffers heavily from these types of issues as well.
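To make the analyzer point concrete, here is a small Python sketch comparing two hypothetical tokenizers. One is a whitespace-only split. The other normalizes: it lowercases, splits on non-alphanumerics, and drops a tiny made-up stopword list. Neither is Lucene's actual WhitespaceAnalyzer or StandardAnalyzer. They only show how the default analysis choice changes the vocabulary an engine has to index.

```python
import re

# Tiny, made-up stopword list for illustration only.
STOPWORDS = {"a", "an", "and", "is", "of", "the", "to"}


def whitespace_tokens(text):
    """Split on whitespace only; case and punctuation are kept."""
    return text.split()


def normalized_tokens(text):
    """Lowercase, split on runs of non-alphanumerics, drop stopwords."""
    return [tok for tok in re.split(r"[^0-9a-z]+", text.lower())
            if tok and tok not in STOPWORDS]


def vocabulary(docs, tokenize):
    """Distinct terms an index would need to store for these docs."""
    return {tok for doc in docs for tok in tokenize(doc)}


docs = ["The Game is ON!", "the game, the fans", "Fans of the game"]
print(len(vocabulary(docs, whitespace_tokens)))  # 10
print(len(vocabulary(docs, normalized_tokens)))  # 3
```

On these three sample strings, the whitespace tokenizer produces 10 distinct terms, because `The`, `the`, `game,` and `game` all count as different terms. The normalizing tokenizer produces 3. So "out of the box" numbers partly measure analysis choices rather than engine quality.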

Out-of-the-box settings are not usually very comparable, and tuning each one is very difficult, since a writer would have a hard time learning each engine in that much detail. The result is that these types of comparisons are of use, but only very limited use. Take them with a heavy grain of salt.

You should get faster indexing throughput from Xapian, and probably a smaller final index too, if you raise the threshold at which it automatically flushes a batch of documents to disk. Currently this defaults to 10000, which is rather conservative for modern server hardware. I don’t see a spec for the hardware, but you can probably set it to 1000000 (a million) comfortably and index all the documents in one go in each case.

Just set XAPIAN_FLUSH_THRESHOLD in the environment (and remember to export it!) before running your indexer – e.g. with bash:

export XAPIAN_FLUSH_THRESHOLD=1000000

I realise this is tweaking a numeric parameter, but then you said you tried “optimize mode” for lucene and “bigfast mode” for zettair – from the description, this is much like the latter – trading memory usage for speed.
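A quick back-of-the-envelope calculation shows the scale of the difference. It assumes, as the comment describes, that a flush happens every `flush_threshold` documents. Real Xapian flushing may also depend on other factors, so treat this as arithmetic only. The function names are hypothetical.

```python
import math


def flush_batches(num_docs, flush_threshold):
    """Batches written if a flush happens every `flush_threshold` docs."""
    return math.ceil(num_docs / flush_threshold)


def words_per_batch(avg_doc_words, flush_threshold):
    """Approximate words buffered in memory before each flush."""
    return avg_doc_words * flush_threshold


TWEETS = 968_937  # tweets in the Twitter data set
print(flush_batches(TWEETS, 10_000))     # 97 with the default threshold
print(flush_batches(TWEETS, 1_000_000))  # 1 with the suggested threshold
# Wikipedia-sized docs (~435 words) vs. tweets (~12 words) at the default:
print(words_per_batch(435, 10_000) / words_per_batch(12, 10_000))  # 36.25
```

With the default of 10,000, the ~969K-tweet set is written in 97 batches, versus a single batch at 1,000,000. With ~12-word tweets, each default batch also carries about 36x fewer words than a batch of ~435-word documents would.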

Lucene and Solr have pretty robust commercial support and services available, with expert best practices consulting and SLA-based support — see http://www.lucidimagination.com.

The problem with reading too much into these bakeoffs is the tuning. And the scale — these are still tiny datasets — try all of MEDLINE or all of Wikipedia or the terabyte TREC data sets.

I’ve only had experience with Lucene, but it’s very flexible in its settings for when to merge indexes, which can trade off memory usage for indexing speed. Given Lucene’s merge model, it’s also easy to shard the index over multiple threads/processes and then merge it into a final index, or just continue searching in sharded form.
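The shard-then-merge idea can be sketched as a toy in Python. Each shard builds its own partial inverted index, and the partial posting lists are concatenated and sorted at the end. This illustrates the general technique, not Lucene's merge implementation or API. The helper names are hypothetical. Threads are used only for simplicity; a real CPU-bound indexer in CPython would more likely use processes because of the GIL.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def index_shard(shard):
    """Build a partial inverted index (term -> doc ids) for one shard."""
    partial = defaultdict(list)
    for doc_id, text in shard:
        for term in set(text.lower().split()):
            partial[term].append(doc_id)
    return partial


def merge_indexes(partials):
    """Concatenate partial posting lists and sort each one."""
    merged = defaultdict(list)
    for partial in partials:
        for term, doc_ids in partial.items():
            merged[term].extend(doc_ids)
    return {term: sorted(doc_ids) for term, doc_ids in merged.items()}


def build_sharded(docs, num_shards=2):
    """Assign global doc ids, split round-robin into shards, index, merge."""
    numbered = list(enumerate(docs))
    shards = [numbered[i::num_shards] for i in range(num_shards)]
    with ThreadPoolExecutor(max_workers=num_shards) as pool:
        partials = list(pool.map(index_shard, shards))
    return merge_indexes(partials)


docs = ["lucene merges segments", "sphinx indexes fast", "lucene shards merge"]
print(build_sharded(docs)["lucene"])  # [0, 2]
```

Document ids are assigned before sharding, so the merged result is the same regardless of the shard count.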

Which version of Java and which settings you use can make a big difference. Setting the -server command-line option on 32-bit Java versions and running with concurrent garbage collection (assuming you have at least two spare threads) can make a huge difference in this kind of long-running (e.g. minutes, not seconds), loop-intensive process. But the server JVM takes longer to fire up.

Mark’s comment above is also spot-on. Tokenization can take up lots of time, and there are huge differences across different tokenizers. Lucene’s StandardAnalyzer also does stoplisting and case normalization, which both reduce index size.

Is the exact corpus you tested against available for download? I’d quite like to try another engine against it. (One I wrote years ago actually, please contact me offline). Cheers.

Very cool. I’ve been wanting to see an attempt at Lucene vs. Sphinx for a while now.

Please do not forget about http://www.egothor.org/

Hi Vik:

Would it be possible to open up your benchmark setup/data? We have been developing a realtime indexing/search solution (open sourced: Zoie) on top of lucene, where we made many customizations to lucene for this scenario. It would be awesome if we could measure how we are doing.

Thanks

-John

Your tweet example with Xapian is bunk. As pointed out, you are using the default, conservatively tuned flush parameter for tweets. Your data says the average tweet length is 12 words. That is not a reasonable document size for a document database and search engine like Xapian to be tuned for out of the box. Wikipedia says its average document size is 435 words, meaning you are flushing 36 times more often in your example than if you had picked a different data set, one that is arguably much more appropriate for benchmarking text indexers. Books and larger documents may contain tens of thousands of words.

Try increasing your flush threshold, as has already been suggested to you, by 10x and then 100x. After all the slashdotting and hoopla you created with your blog post, it would be constructive and responsible of you to take this suggestion, and all the others made here pointing out the weaknesses of your micro-benchmark, and post more reasonably tuned numbers.

Wouldn’t it be easier and more constructive to just test this? The code is open.

Quite obvious you’re an anonymous Xapian person, BTW 😉

I applaud the idea, the work, and the process. The author made disclaimers that it was a quick benchmark to get the ball rolling, and it did that.

@xyz, @figvam

I found the 100GB figure from this page:

http://sphinxsearch.com/about.html

Granted, that figure assumes a single CPU; distributing the index should theoretically increase the scale, and I’m sure that’s what craigslist does.

Best way to figure out the best indexing scheme for your app is to simply benchmark it

@jeffdalton

Totally agree

Indri and Terrier should be tested as well

The code is open. Anyone want to contribute?

@Lee

Thanks for your additional insight

“Search Engines” just sounds better in the title 🙂

@Mark, @Olly, @Bob, @MP

I understand that “Out of the Box” configurations on each of these platforms use different tokenizers, numerically tuned settings, ranking functions, etc.

However, for simplicity, and for a first evaluation, it seemed worthwhile to test “Out of the Box” configurations. That’s also how many developers end up using these platforms and so I felt it was important to reward solutions that provide good general default settings.

Also, I intentionally experimented with Twitter data because I knew these platforms weren’t well designed for this input type; I wanted to see how well they do anyway. How adaptive and general are these platforms for new, popular data sources like tweets?

Also, the Medical data has a much larger text size average (> 200 words) so it’s closer to a normal document size

But I agree bigger data sets with traditional web like documents should be tested as well

Additionally, realize that I’m benchmarking all these platforms against the same data set. If Xapian suffers significantly from the FLUSH_THRESHOLD, then why don’t Lucene or sphinx exhibit the same behavior? You’re probably right that the FLUSH_THRESHOLD is holding Xapian back in these tests (and when time permits I’ll test it), but why is it more sensitive to this setting than these other platforms? What I’m trying to get at is that maybe the default setting should be changed, or maybe it should be more adaptive. Regardless, I still think it’s important that out-of-the-box settings be competitive, given that most developers stick with them.

I tried really hard to avoid numerical changes for this first evaluation (albeit I did try big-and-fast for zettair and optimize for lucene on just the Twitter set because they were more flags as opposed to numerical changes) for the reasons I cited earlier: It takes a lot of time and quite a bit of tribal wisdom to optimize just a single platform, but then you have to optimize each platform (because now you have to be fair – which even with tuning is hard to guarantee), and tuning parameters can vary quite a bit depending on hardware specs and the type of input data set. Plus, I was lazy 🙂

I could have used different, bigger TREC data sets, but they would have taken time to acquire (you have to pay and mail in CDs, and I was doing this on a weekend budget, mainly to generate code examples for the popular platforms), and they wouldn’t be publicly available for others to repeat the experiments. The OHSUMED data set was the only free/public one I could find online that had judgments, which are critical for determining relevancy.

In the future I definitely want to see how well these platforms perform with different data sets and tuned implementations. The source code is all available so please feel free to go wild and I’ll post any new and interesting results you guys send my way.

Also, I didn’t mean to get slashdotted 🙂 Really appreciate the blog love though. I tried my best to make these benchmarks useful, but let’s work together to make them bigger and better. I see this work as being very evolutionary. Keep in mind that it’s pretty hard to make a benchmark that everyone likes (especially in this space where there haven’t really been any at all … and I’m starting to see why 🙂 ). That’s not necessarily because there are always winners/losers and biases in benchmarks, but because there are so many different types of data sets, platform APIs, and tuning parameters (at least databases support SQL). Hopefully this is just the beginning. Take the results here for what they’re worth; I do hear they’re consistent with several other developers’ results.

Really appreciate your feedback.

>>However, I really wanted to test “Out of the Box” configurations because that’s how many developers end up using these platforms and I felt it was important to reward solutions that provide good general default settings

That’s a bit dangerous. A full-text search solution unfortunately still needs some configuration for its data set and use. Out-of-the-box is very important, but you can only do so much. There are a lot of tradeoffs, which makes comparisons almost impossible. If you reward out-of-the-box performance while ignoring everything else, I would change my search engine to whitespace-tokenize with no other processing. I wouldn’t rank or sort or facet or lowercase, or store positions or offsets, or do much of anything. There are all kinds of quality tradeoffs that can be made. You may be rewarding bad tradeoffs: performance in these skin-deep, out-of-the-box comparisons at the expense of a better user experience.

I think you wrote a great and interesting post. I just think it needs to be looked at for what it’s worth, and I don’t mean that negatively at all. This is a great start, and I think a step above the cited paper. Giving the subject its proper due would certainly be a Herculean effort over so many engines. Heck, even this must have been a fair amount of work. I wouldn’t have undertaken it myself 😉

@ Mark

I agree that tuned implementations that attempt to truly level the playing field should definitely be tested (the more benchmarks the better :)). But as you mention, that would be a lot of work, and it varies with different data sets and hardware. I think there’s still a lot you can take away from how these platforms work out of the box, especially with two different data sets. Lucene’s consistency is quite promising. That’s great for developers, because they can tune to “optimize” rather than tuning just to get it “working”. But agreed, more needs to be done before a ‘final winner’ is chosen, per se.

It is true you can easily optimize your platform to do indexing faster (partial indexing, crappy tokenization) with the tricks you mention, but that’s why it’s important to look at the combination of key dimensions as analyzed in these experiments (relevance, search speed, indexing time, memory) and weight the ones that are most important to your application. You might make indexing unfairly fast but the relevance scores will most likely drop.

But the code is all open source so feel free to send me results of different implementations and I’d be happy to post them if they make sense. I definitely see this work as a starting point for more evaluations.

BTW, one way to scale this experiment without having to overdo tuning for each platform is to run each system over many, many different types of data sets in an attempt to normalize the settings and discover relative patterns of performance.

Really appreciate the feedback.

So, how did you calculate the “support” scores?

@ Anonymous

Those are totally (and hopefully the only) subjective scores listed in these experiments. I basically talked to people and examined community sizes. Please feel free to flame on those.

Great writeup! I’d love to see CouchDB benchmarked using its pure-Javascript “view” system. The view system might be a good scaling factor considering it works by mapping/reducing across any number of database partitions. I have a feeling that inserts and reads will be pretty slow though. Would be interesting nonetheless. 🙂

Vik, thanks for the effort. I won’t repeat what others, especially Mark (I’m guessing that’s Mr. MM there, right Mark?) and Bob said.

Sematext (click on my name) deals with Lucene and Solr performance on a daily basis.

Ah, let me just look at the Lucene code. Lucene is written in Java. I see you didn’t use the -Xmx JVM argument. This is not really “Lucene out of the box”, but more “JVM out of the box”.

Look at this article I wrote 6+ years ago:

http://www.onjava.com/pub/a/onjava/2003/03/05/lucene.html

There are a number of clear and concise examples of how Lucene behaves in terms of indexing speed depending on various parameters.

If you want to try Lucene again, here are 4 simple things you should do:

– Use appropriate -Xmx

– Use WhitespaceAnalyzer

– Call setRAMBufferSizeMB with appropriate number on the IndexWriter before indexing

– Create several warm-up loops to warm up the JVM before measuring
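The warm-up advice applies to any timing harness. Below is a minimal Python sketch of the pattern. `benchmark` is a hypothetical helper, and the sorting workload is only a stand-in for an indexing or search run. In a JVM harness, the untimed warm-up runs are what let the JIT compile hot code before measurement starts.

```python
import statistics
import time


def benchmark(fn, warmup_runs=3, measured_runs=5):
    """Call fn untimed `warmup_runs` times, then return the median
    wall-clock duration (seconds) of `measured_runs` timed calls."""
    for _ in range(warmup_runs):
        fn()
    timings = []
    for _ in range(measured_runs):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


# Example: time a stand-in workload (hypothetical; swap in an indexing run).
print(benchmark(lambda: sorted(range(100_000), reverse=True)))
```

Reporting the median rather than the mean keeps a single slow outlier, such as a garbage-collection pause, from dominating the result.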

Can you post the twitter file that you used for testing. I didn’t see it in the git repository. I would like to run it against our log search index (which is optimized for this type of data) so I can compare the results.

@Otis

Thanks for the feedback. Tried to avoid optional flags and numerical tuning on purpose in this simple benchmark (takes a bit of work to tune each) but will definitely keep those in mind for future evaluations. Also, it’s open source, so feel free to contribute as well.

@Chris

I’m checking with Twitter right now to see if I can legally post up my data set. The OHSUMED data set is available though (linked in this post and in the README).

@Otis

I definitely agree I should give the JVM time to warm up before measuring.

I’ll try the WhitespaceAnalyzer when cycles permit. Mainly used StandardAnalyzer because it seemed more ubiquitous in the examples I read (and I was really trying to use expected library routines to simulate the common developer case).

Best

I always use open source search engines…

Thanks for the stats about Twitter, guess it’s not a bad thing then that my site has a few Twitter accounts sending feeds similar to RSS 🙂

Here’s a nice thought for a benchmark using Wikipedia data: http://mysql-full-text.blogspot.com/2007/10/ram-is-1000-times-faster-than-disk.html. This article is quite outdated, but the idea is good. Maybe you could think of trying it out.

Can you say, bias? This company builds on top of Xapian 🙂

Hi Vik. Cool that you wrote about open source search engines. I am also interested in this topic. Did you know that I even found your blog via Google search for “open source search engines”? Just thought I would pass that info along to you. cheers!

Regarding Twitter stats and the large proportion of questions — yes, the percentage is large. Note that the W’s and the H’s are English-specific, so the real percentage is much higher.

Maybe search for the “?” (but outside of URLs)

@Vik – well, there really is no “expected” or “out of the box” with most things in Lucene — it’s a toolkit. But you know that. 🙂

@Flax

It was a long, long weekend 🙂

To all the Xapian folks out there – I know these benchmarks don’t really show off the power of your system (especially since it’s intentionally not tuned). Hopefully future evaluations will. I do want to say though that I was very impressed by the libraries and interfaces. It’s a beast.

@Otis

Good point! I was checking for “?” but didn’t filter out URLs first (most shortened URLs shouldn’t have them though …). I just re-ran the stats to make sure and it returned 18.7%, which still rounds up to 19% (phew). Thanks.
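Otis's suggestion of counting "?" only outside URLs can be sketched as a simple heuristic in Python. The URL regex is a deliberately simple assumption that only catches `http://` and `https://` links. The helper names are hypothetical, and this is not the exact filter used for the stats in the post.

```python
import re

# Simple URL pattern (assumption): only http:// and https:// links.
URL_RE = re.compile(r"https?://\S+")


def is_question(tweet):
    """Heuristic: a '?' that survives after URLs are stripped."""
    return "?" in URL_RE.sub(" ", tweet)


def question_share(tweets):
    """Fraction of tweets flagged as questions (0.0 for an empty list)."""
    if not tweets:
        return 0.0
    return sum(is_question(t) for t in tweets) / len(tweets)


sample = [
    "anyone up for lunch?",
    "read this http://example.com/page?id=3",
    "nice day",
    "where is everyone? http://x.co/a",
]
print(question_share(sample))  # 0.5
```

In the sample, the "?" inside the query-string URL no longer counts, so 2 of the 4 tweets are flagged.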

Some thoughts on this type of stuff:

http://www.jroller.com/otis/entry/open_source_search_engine_benchmark

(note the bolded part)

@Otis

Thanks for the feedback!

I definitely see these benchmarks being incremental and I agree getting community support is key.

Never expected to get a lot of traffic … If I had known, I would have tried to test more modes of optimization and confer with the community on each implementation (but that’s a LOT of work). I would have also put some ads up 😉 Fortunately, the code is open source, so let’s continue to contribute to make these experiments bigger and better.

I updated my conclusion section last night so that readers understand that my experiments aim to demonstrate more “out of the box” effectiveness (which I think is very important).

Best

Hey Vik, bravo for taking all these comments as constructive criticism rather than taking them as offensive and getting defensive. Well done!

Thanks Vik for this very well written and detailed post on open source search solutions

At our start-up we’re observing similar performance stats between Lucene and Sphinx on our data as well. I learned a lot from your experimental setup; for example, we hadn’t heard of DCG before, and it looks like a great metric for search ranking. We can all benefit from more benchmarks like this, and we’ll be following your open source project very closely.

I’m glad you’ve got the ball rolling on open-source search evaluation for SIGIR. Just getting them all running against the same data is a good start. And I agree, a comparison is more revealing than stand-alone evaluations.

Using tweets and medical abstracts (intensively hand-crafted) seems a bit limited; I’d like to see a more heterogeneous corpus, including long HTML, ugly HTML, office documents, etc. My default source for this kind of thing is the US federal government, whose works have no copyright as such. In addition to out-of-the-box results, it would be very interesting to see comparisons of lightly tuned search engines, with maybe no more than 20 or 30 configuration line changes.

Could you explain a little why you focused so significantly on index size? I’ve found that it’s much better to take more room for an enriched index, with gentle stemming, including stopwords, alternates for accented characters and ambiguous-term punctuation, position and field metadata, etc.

Someone on this thread pointed out the importance of adds, deletes, and updates, and that’s where indexing speed and disk footprint might matter more.

As Otis says, thank you for taking the comments in the spirit offered, and I hope you continue to post about search evaluation.

PS I had a better comment but the browser ate this. If you see two, please delete one.

PS: Solr doesn’t come with a crawler, it can’t even read HTML files properly. There is good documentation for connecting Nutch to Solr though: http://www.lucidimagination.com/blog/2009/03/09/nutch-solr/

@Avi

Yes, can’t forget Nutch. Updated. Thanks!

My fav is Lucene. Anyways I created some Pocket God forums at http://www.pocketgodforums.tk . So if anyone wishes to join them go right ahead!

http://www.dataparksearch.org/ – one more open source search engine, if you test more of them.

http://www.lemurproject.org/ and

http://www.austlii.edu.au/techlib/software/sino/

are also on my list. I’ve used the latter, Sino, to index documents in my Linux file system. Although I once compiled Lemur/Indri, I didn’t have time to come to terms with the query language, which differs markedly from the boolean/proximity operators usually supported by information retrieval systems.

@Avi

Totally agreed – bigger, diverse data sets should be tested as well. This was just a fun experiment around data I had readily available.

Didn’t mean to focus a lot on index size, but it is an important factor to keep in mind, especially at scale. Also, smaller indexes may help explain on-disk query speed and indexing time.

Just wanted to say thanks for doing this with the Twitter data set. For years I have been working, using commercial databases, with data sets whose records average no more than 20 words. It seems most people overlook this need for text search over small records (albeit 130M+ of them).

Great post Vik.

It would be really interesting to also compare:

1/ Ability to perform real-time updates. This is especially relevant if you are considering a Twitter-esque dataset in which relevance is heavily influenced by recency.

2/ Ability to perform parametrized/faceted queries.

3/ Actual distributed nature of the system.

@Aditya

Thanks for commenting!

Totally.

I was actually originally planning to just benchmark real-time tweets against these platforms, but when I dove into it I realized some of them didn’t support incremental indexing very well (let alone in-memory near-real-time indexing), so I switched to a more general test (I also wanted code examples for each platform for the talk). It would definitely make for a very interesting evaluation, I think.

I am sorry, but Vik’s comparison of OSS engines would not be presented at SIGIR. It misses almost everything good research would have. The numbers are misleading (except for the “Relevancy” column); the performance differences are not commented on …or rather… cannot be explained by the author, because he does not know the technological background of the tested systems, etc., etc.

Sorry for this negative feedback. When I see “out of the box tests”, I read it as: “I don’t have enough time to understand the tested projects, I just need SOME numbers”. That is fine for a blog post, but for an academic conference?

@bob

I completely agree this is not in the form for an academic conference/publication (this is just a blog post). I did these tests primarily to generate code examples and howtos for some of the popular platforms which is what I plan to present – not these results. I did these more out of curiosity+fun and to hopefully spur more research in this space as it takes a lot of work and collaboration to nail good benchmarks/explanations for multiple platforms.

Happy to admit I didn’t have the time to fully understand the inner workings of all the platforms used in these experiments (some of the documentation was quite incomplete on this as well); I only had a weekend budget and felt these preliminary results were interesting enough to get the ball rolling. Again, this is just a blog post to start the conversation, and I tried my best to make that clear in my write-up.

I hope this post encourages academia and the community to make even better benchmarks (I open sourced the code). And on a side note – I really hope the great ideas in academia can surface more informally through blogs as well to increase accessibility.

Cheers

nice post vik. open sourcing your benchmarks is a fantastic idea and i hope some of the people commenting here can also walk the walk and contribute 🙂 if you have an idea on how this can be done better then just do it! this must have taken a ton of work so bravo to u. optimizing every solution could surely use community help, & every benchmark, especially the first ones in a field, will probably never satisfy everyone. i thought you were clear this was for fun and just a start, and i appreciate you taking the chance to make your methodologies and results available bc we need more of that

I wonder why Sphinx’s “scale” is claimed to be in the 100 GB range. The biggest known installation indexes around 2 TB. Not quite the 0.1 TB you claim…

I also wonder what OS you used and what ranking mode you used for Sphinx (ranking modes vary a lot in both performance and relevance), but I suppose that should be possible to peek at in the code.

BTW, 100GB limit for Sphinx is stated in the Sphinx Documentation: http://www.sphinxsearch.com/docs/current.html#features

Maxime, did you notice “on a single CPU” part?

I admit that “100 GB on a single CPU” is pretty confusing though and needs to be changed.

What it was *supposed* to mean is that I’ve seen production installations running 100+ GB indexes on a single CPU and HDD, *and* they were practically usable. (Average query time was in seconds; not super-quick, but still OK for some cases.)

Bad wording on my part indeed, but it still amazes me how people tend to deduce “Sphinx will break beyond all repair at 101 GB boundary” anyway.

A few words on relevance…

According to http://trec.nist.gov/data/filtering/README.t9.filtering, “the list of documents explicitly judged to be not relevant is not provided here”

So of 630 results, only 54 found by Sphinx and 90 found by Lucene actually have any assessed scores attached. Not a single result set is fully assessed. Only 17 Sphinx result sets and 22 Lucene result sets have *any* non-zero assessments.

And scorer.py accounts for unassessed matches as explicitly irrelevant. Bummer.
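The coverage figures above count two things: how many returned results carry any assessment at all, and how many result sets contain at least one non-zero assessment. Both can be reproduced along these lines. This is a minimal sketch that assumes the runs and the judgments have already been parsed into dictionaries. That data layout is hypothetical and is not the format scorer.py uses.

```python
def assessment_coverage(runs, qrels):
    """
    runs:  {query_id: [doc_id, ...]}      ranked results per query
    qrels: {query_id: {doc_id: grade}}    judged docs only; unjudged docs are absent
    Returns (judged_results, total_results, result_sets_with_nonzero_judgment).
    """
    judged = total = sets_nonzero = 0
    for qid, docs in runs.items():
        grades = qrels.get(qid, {})
        total += len(docs)
        judged += sum(1 for d in docs if d in grades)
        if any(grades.get(d, 0) > 0 for d in docs):
            sets_nonzero += 1
    return judged, total, sets_nonzero

runs = {"q1": ["a", "b", "c"], "q2": ["d", "e"]}
qrels = {"q1": {"a": 2, "z": 1}, "q2": {"e": 0}}
print(assessment_coverage(runs, qrels))  # (2, 5, 1)
```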

@Andrew

Thanks for commenting!

I agree re: the scale. I tried to clarify in my comment that it doesn’t assume distributed setups which I’m sure can handle terabytes.

For ranking, the TREC 9 data set is not the best by any means. Unfortunately, it’s the only publicly available set I could find that didn’t cost money or require mailing CDs (which is quite unfortunate; I’m going to look into whether I can help change that).

Your relevance stats are spot on with what I found as well. Retrieving judged-relevant results doesn’t happen often with this “converted” data set (these tasks don’t make perfect queries either!), but I think this behavior roughly normalizes across the board, so the relative scores can still provide value. In scorer.py I give results not labeled for a query a score of 0 (i.e. I assume they are non-relevant). That’s not necessarily the best assumption to make without judgment support, but I think it’s a reasonably fair metric: it boosts result sets that include assessed documents (weighted by rank order), and the assessed documents are only ‘somewhat relevant’ or ‘relevant’ matches (so I grant that I’m assuming the best results for each task are most likely in the judged set). This strategy seemed to separate the scores better, and I believe the relative ranking was still consistent with what scorer.py returns when it gives an unassessed match a score of 1 (which effectively focuses on matching only results at level 2, i.e. ‘definitely relevant’).
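The assumption under debate reduces to one knob: the gain an unjudged document receives. Below is a sketch of DCG@10 that exposes that knob, so you can see how changing the unjudged default from 0 to 1 moves the score. It assumes the common rel_i / log2(i + 1) discount with ranks starting at 1, and grades of 1 (‘somewhat relevant’) and 2 (‘definitely relevant’) for judged documents. scorer.py's exact formula may differ.

```python
import math

def dcg_at_k(ranked_docs, judgments, k=10, unjudged_gain=0):
    """DCG@k = sum of rel_i / log2(i + 1) over the top k ranks (i starts at 1).
    Documents missing from `judgments` receive `unjudged_gain`."""
    score = 0.0
    for i, doc in enumerate(ranked_docs[:k], start=1):
        rel = judgments.get(doc, unjudged_gain)
        score += rel / math.log2(i + 1)
    return score

judgments = {"a": 2, "b": 1}     # hypothetical grades for one query
ranking = ["a", "x", "b"]        # "x" was never assessed
print(dcg_at_k(ranking, judgments, unjudged_gain=0))  # 2.5
print(dcg_at_k(ranking, judgments, unjudged_gain=1))  # 2.5 + 1/log2(3)
```

With a default of 1, unjudged hits add score but no longer separate the systems much. Only the level-2 matches stand out, which matches the behavior described above.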

I was super impressed by the speed and ease of Sphinx. I can totally see why craigslist uses it esp. with MySQL.

Great work Vik.

It’s nice to have example code and scorers for some of the popular open source search engines in one place and I agree a community supported benchmark is the way to go.

@Vik

I can’t agree with you about the scoring metric being fair, simply because it favors systems that find more assessed matches rather than more *relevant* matches. With only 1.5% of the collection and under 15% of any result set assessed, that’s not the kind of coverage I’d base my own opinions on.

Also my numbers are slightly different. Specifically, I get

lucene-dcg = 1.061

sphinx-matchany-dcg = 0.626

sphinx-default-dcg = 0.882

sphinx-bm25-dcg = 0.895 (sic!)

Oh, and Sphinx with the BM25 ranker (something that Lucene and other systems default to) is also 2-3x faster. Guess we should have made it the default. It would definitely look better on this kind of benchmark.

Well, too bad we’re defaulting to something more complicated than BM25 ranking.

Though anyway, it’s funny that you chose the non-default match_any ranker, which was pretty much the worst possible choice for your particular tests.

I’ve a number of other interesting findings but these blog comments don’t seem the right place for them. I should write a followup. Or several.

Last but not least I should thank you for both your blog post and sharing the code.

Even though I do find some of the choices and results, uh, pretty strange, on the other hand that’s exactly the reason I now have the itch to finally wrap up and publish my own results. 😉
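For readers unfamiliar with the BM25 ranking Andrew mentions, here is roughly what a textbook Okapi BM25 scorer looks like. It is an illustrative sketch, not Sphinx's BM25 ranker or Lucene's scorer. The parameters k1=1.2 and b=0.75 and the always-positive IDF variant ln(1 + (N - n + 0.5) / (n + 0.5)) are common defaults chosen as assumptions.

```python
import math
from collections import Counter

def bm25_scores(query_terms, docs, k1=1.2, b=0.75):
    """Okapi BM25 score of each tokenized document for a bag of query terms.
    IDF variant (an assumption): ln(1 + (N - n + 0.5) / (n + 0.5))."""
    if not docs:
        return []
    n_docs = len(docs)
    avgdl = sum(len(d) for d in docs) / n_docs
    df = Counter(t for d in docs for t in set(d))  # document frequency per term
    scores = []
    for d in docs:
        tf = Counter(d)
        s = 0.0
        for t in query_terms:
            if tf[t] == 0:
                continue
            idf = math.log(1 + (n_docs - df[t] + 0.5) / (df[t] + 0.5))
            norm = k1 * (1 - b + b * len(d) / avgdl)  # document-length normalization
            s += idf * tf[t] * (k1 + 1) / (tf[t] + norm)
        scores.append(s)
    return scores

docs = [["sphinx", "search", "engine"], ["lucene", "search"], ["cats"]]
print(bm25_scores(["search"], docs))  # shorter doc 1 outscores doc 0; doc 2 scores 0
```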

@Andrew

Your comment got spam filtered. I usually never check but fortunately saw this and recovered it.

I updated my earlier comment about the fairness. I assume the assessed data will most likely include the best results (as multiple editors redundantly found these results to be relevant via search) so matching more with this set is ideal. That might be a bad assumption, but otherwise this data would be completely useless 🙂 Regardless, this data set isn’t very good and others need to be tried.

I think I tried matchany because these queries are long on average and their terms are not guaranteed to all be contained in any single document (as I mentioned, these tasks don’t make perfect queries), but I forgot to set it back to the default. However, I agree that not setting it (or SetWeights; I think I borrowed that from the Java example in the Sphinx API directory) is more ‘out of the box’, as you mention.

```java
SphinxClient cl = new SphinxClient();
cl.SetServer("localhost", 8002);                      // searchd host and port used in this run
//cl.SetWeights(new int[] {100, 1});                  // non-default field weights, now disabled
//cl.SetMatchMode(SphinxClient.SPH_MATCH_ANY);        // non-default match-any mode, now disabled
cl.SetLimits(0, 10);                                  // top 10 results, starting at offset 0
cl.SetSortMode(SphinxClient.SPH_SORT_RELEVANCE, "");  // sort by relevance
```

When I re-run Sphinx Search with those two lines commented out I get:

Avg. DCG 0.769012899762

That’s much better, although the relative ranking remains the same.

Oddly, our scores don’t match up (even for Lucene), and I just re-ran them all to double-check. Either our scorers or our Search.java files differ. I’ll look into this when I get more time and update the code/chart accordingly.

Also, using something more complicated than BM25 doesn’t always make it a better choice 🙂 Of course, basing the default on how well it does on this data set probably isn’t a good idea either 🙂

I’m with you – shouldn’t make final opinions on which solution to use based on these experiments. Always run your own benchmark.

Looking forward to reading your comments and benchmarks (esp. from a real Sphinx expert) and appreciate the feedback. Let’s make these experiments better!

Cheers

Vik, thanks for this post. Never mind angry voices, it’s always been a domain for religious wars.

I updated the charts to reflect Sphinx’s new performance numbers based on a closer-to-‘out of the box’ setup. I still need to double-check them but thought I’d put up my latest for now.

Nice that you started benchmarking OSS indexers. There is a need for that, and it looks like you provided the initial impulse.

As it’s the beginning, maybe the method has to be improved and better datasets selected, but now you’re getting feedback from specialists in the different indexers. That’s pretty good!

Maybe they can agree on defining and refining a standard benchmarking method as well as a data set (and the hardware test platform), and then come with different tuning for the different indexers.

Friendly competition à la “top500” would benefit everybody…

E.

great post vik!

I found your article and comments to be very insightful and a net positive for the search world. I echo eviosard: the more open source competition, the better. Thanks for taking charge.

Hi all,

I thought some of you might be interested to know we’re sponsoring a one-day mini conference on open source search: http://searchevent.org/

to be held on Sep 29th in Cambridge UK. Would be great if some of you could make it!

Charlie

wow.. great comparison. What about backlink crawl?

Eye-opening, especially for Lucene users.

We just integrated Heritrix with Solr using Java middleware, calling it YouSeer. The code is on SourceForge. We think this will compete with any of the open source search engines, such as Nutch and Hounder. We would appreciate any comments.

Best

Lee Giles

Good to see such a comparison and rich discussion in one place. Vik, I appreciate your entrepreneurship.

Has anyone come across new benchmarks for some of the alternative search engines out there, such as http://www.yebol.com?

Vik, the results are very interesting. Anyway, what kind of hardware platform did you use for the benchmarks?

Curious why you did not just work with Solr to begin with? Seems easier http://www.lucidimagination.com/Downloads/

Vik,

thanks for this article. I am looking for a full-text search engine for a project that will have 50M+ rows of short text, so I am interested in your Twitter experiment. Two questions:

1) How did you store the tweets? Was it 1M files? Or can I put all the tweets in one or a couple of CSV files and then use Lucene/Sphinx on them?

2) Did you compare search speeds and what box did you use for testing?

Thanks!

Hi James – I stored all the tweets in one file (a tweet per line). If you check out the github code link at the end of the post you’ll find the code that reads each line into the indexer.

Best,

— Vik
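The one-tweet-per-line layout Vik describes is simple to consume: each line becomes one document. The sketch below uses a toy in-memory inverted index as a hypothetical stand-in for a real indexer (Lucene, Sphinx, etc.). The zero-based line number serves as the document id, and tokens are lowercase whitespace-split words. That tokenizer is deliberately naive and is an assumption, not what the repository code does.

```python
from collections import defaultdict

def index_tweets(lines):
    """Toy inverted index over an iterable of lines, one tweet per line.
    Doc id = zero-based line number; tokens = lowercase whitespace-split words."""
    postings = defaultdict(set)
    for doc_id, line in enumerate(lines):
        for token in line.lower().split():
            postings[token].add(doc_id)
    return dict(postings)

# Any iterable of lines works, including an open file.
# "tweets.txt" is a hypothetical file name:
#   with open("tweets.txt", encoding="utf-8") as f:
#       index = index_tweets(f)
index = index_tweets(["Hello world\n", "hello Sphinx\n"])
print(index["hello"])  # {0, 1}
```

Streaming the file line by line keeps memory flat on the reading side, which matters at 50M+ rows. The index itself is the real memory cost, and that is where a real engine earns its keep.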

Vik, thanks for your reply. I have already checked that link for the sources, but it looks like there is only the ohsumed folder from your medical data set, not the Twitter one. I would really appreciate a chance to see how it worked for short texts; could you please make it available? Thanks

Hi Vik, thanks for your reply.

I have already checked that link, but I only found the sources from your medical text experiment, not the Twitter one. Could you please make the Twitter sources available? Cheers!

Vik, could you please check if the Twitter experiment code is still there? I could only find the med one. Thanks!

@James – Sorry this was flagged as spam for some reason. You’re right the Twitter code isn’t there … it’s been a while since I ran that experiment but if I find the code I’ll post it there.

— Vik

Hi there.

Quite exciting!

Do you know of an open source engine being used in any project on a large public government website with laws, government regulations, and administrative decisions?

Thanks in advance

Paris

Hi Vik,

Thanks for a really interesting article. I have one question regarding the relevancy scores for the medical test. You say you calculated average DCG, and I guess you are talking about normalized DCG. However, as far as I have understood, normalized DCG should return a top score of 1.0, but Lucene got a score of 1.0499. Could you comment on this?

Also, it would be interesting to know something (cpu/memory) about the hardware you used for the test.

Best regards,

Arne

Hi Arne –

When I ran this test I computed DCG for the first ten results, not nDCG (which takes the DCG score and divides it by the best possible DCG for that result set).

I ran these tests on a 2007 MacBook Pro with 4 GB of RAM.

Keep in mind that these tests are a bit old and weren’t perfect (did them on a weekend day, a better evaluation set would help greatly, etc.) so take it with a grain of salt.

Whenever I have spare cycles, I’ll try to take another stab at this benchmark with the latest versions of these platforms. I’d love to try it again, especially with all the new knowledge and use cases I’ve picked up since then.

— Vik
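Arne's puzzle comes from the difference between the two metrics. Raw DCG has no upper bound and grows with the grades and the number of relevant hits. nDCG divides by the DCG of the ideal ordering, so it tops out at 1.0. Here is a minimal sketch assuming graded judgments in a dict and the common log2(rank + 1) discount. It is not the scorer.py code. It builds the ideal ordering from all judged documents for the query, which is the usual definition. Vik's reply describes normalizing by the best possible DCG "for that result set", which could be read slightly differently.

```python
import math

def dcg(gains):
    """DCG of gains listed in rank order: sum of g / log2(rank + 1), rank starting at 1."""
    return sum(g / math.log2(rank + 1) for rank, g in enumerate(gains, start=1))

def ndcg_at_k(ranked_docs, judgments, k=10):
    """nDCG@k: DCG of the returned top k divided by DCG of the ideal top k.
    Unjudged documents get gain 0."""
    gains = [judgments.get(d, 0) for d in ranked_docs[:k]]
    ideal = sorted(judgments.values(), reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0

judgments = {"a": 2, "b": 1}
print(dcg([2, 0, 1]))                           # 2.5: raw DCG can exceed 1.0
print(ndcg_at_k(["a", "x", "b"], judgments))    # below 1.0
print(ndcg_at_k(["a", "b"], judgments))         # 1.0 for the ideal order
```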

Hi Vik,

Thanks for your reply!

Just a question regarding the TREC-9 data: you mention 196403 references, but I could not get this number to match the data. Did you use the data from trec9-train (ohsumed.87, 54715 articles), or the data from trec9-test (ohsumed.88-91, 293882 articles)?

Best regards,

Arne

Hi Arne –

My memory is a little foggy on how I got that number, but based on the code I checked in on GitHub, it looks like the data source was 88-91.

If you’re looking to do search performance / evaluation I would highly recommend using a real search TREC data set as ohsumed is quite old and I think it was more tailored for collaborative filtering evaluation (it was the only data set I could find online at the time though).

Best,

— Vik
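Mismatches like Arne's are easy to check by counting the records in each file directly. This sketch assumes the SMART-style OHSUMED layout, where each record begins with a `.I <n>` line. Verify that against your own copy of the files. The latin-1 encoding is also an assumption. Neither the sketch nor its output claims which count the post's figure came from.

```python
def count_ohsumed_records(lines):
    """Count records, assuming each one starts with a line like '.I 123'."""
    return sum(1 for line in lines if line.startswith(".I "))

def count_records_in_files(paths):
    """Total record count across several OHSUMED-style files (encoding is an assumption)."""
    total = 0
    for path in paths:
        with open(path, encoding="latin-1") as f:
            total += count_ohsumed_records(f)
    return total

# e.g. count_records_in_files(["ohsumed.87"]) vs. count_records_in_files(["ohsumed.88-91"])
print(count_ohsumed_records([".I 1\n", ".U\n", "87049087\n", ".I 2\n"]))  # 2
```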

Good…

What kind of hardware platform did you use for the benchmarks?

In our tests we don’t get this speed.

There is another brand-new, great open source search engine: FASTCAT!!

It’s fast, reliable and java-based.

If you have JDK 1.5+ in your environment, you can start a search server with just one click.

Check it out!

http://code.google.com/p/google-fastcat/

Where is FASTCAT’s code?

Hi Vik

Where can I find information, e.g. a step-by-step guide or manual, on how to evaluate Lucene on a TREC collection and how to feed Lucene’s result file into trec_eval to compute recall, precision, MAP, etc.?

Best regards Sid
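For Sid's question, the piece that connects Lucene to trec_eval is a run file. Each line has six whitespace-separated fields: query id, the literal `Q0`, document id, rank, score, and a run tag. trec_eval is then invoked as `trec_eval qrels_file run_file`. Below is a minimal sketch that writes that format from already-ranked results. The `results` layout and the default tag `"lucene"` are assumptions. As far as I know, trec_eval orders documents by the score column rather than the rank column, so scores should decrease down each query's list.

```python
def to_trec_run(results, run_tag="lucene"):
    """Format ranked results as trec_eval run lines: 'qid Q0 docno rank score tag'.
    `results` maps query id -> list of (doc_id, score) pairs, best first."""
    lines = []
    for qid, hits in results.items():
        for rank, (doc_id, score) in enumerate(hits, start=1):
            lines.append(f"{qid} Q0 {doc_id} {rank} {score:.4f} {run_tag}")
    return lines

for line in to_trec_run({"1": [("d3", 2.5), ("d7", 1.25)]}):
    print(line)
# 1 Q0 d3 1 2.5000 lucene
# 1 Q0 d7 2 1.2500 lucene
```

Write these lines to a file, one per line, and pass it to trec_eval together with the qrels for the same query ids.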

Hi Vik,

I am looking for a search engine that would support multiple index and data files (up to 3000). That would allow me to update the data and index just by replacing one data/index pair file instead of messing with deleting/inserting records into one common data/index file. Does a search engine like that exist? Would search over 3000 index files make it much slower than searching just one, so it would not make sense at all? My dataset is similar to your twitter experiment – millions of very short text lines, all searchable and they will be only updated in blocks by completely replacing old block with new data. Thus my question about multiple index files. Thank you

Nothing wrong with having multiple search indexes, although 3000 for a single application sounds quite high and I would recommend merging them. You could write a class that wraps multiple index instances and from there expose search functionality that multi-threads the searches across all the indexes.
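The wrapper suggested above can be sketched as follows: fan the query out to every index on a thread pool, then merge the per-index top-k lists into one global top-k. The index interface is hypothetical. Each index is assumed to expose `search(query, k)` returning `(score, doc_id)` tuples, and `ListIndex` is a toy stand-in for a real engine.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor

class MultiIndexSearcher:
    """Runs a query against several indexes in parallel and merges the top-k hits.
    Each index is assumed (hypothetically) to provide search(query, k) -> [(score, doc_id)]."""

    def __init__(self, indexes, max_workers=8):
        self.indexes = list(indexes)
        self.pool = ThreadPoolExecutor(max_workers=max_workers)

    def search(self, query, k=10):
        futures = [self.pool.submit(ix.search, query, k) for ix in self.indexes]
        hits = (hit for f in futures for hit in f.result())
        return heapq.nlargest(k, hits, key=lambda h: h[0])  # global top k by score

    def close(self):
        self.pool.shutdown(wait=True)

class ListIndex:
    """Toy index: scores a doc by how many distinct query words it contains."""
    def __init__(self, docs):
        self.docs = docs  # {doc_id: text}

    def search(self, query, k):
        words = query.lower().split()
        scored = [(sum(w in text.lower().split() for w in words), doc_id)
                  for doc_id, text in self.docs.items()]
        return heapq.nlargest(k, [s for s in scored if s[0] > 0])

searcher = MultiIndexSearcher([ListIndex({"a1": "open source search", "a2": "cats"}),
                               ListIndex({"b1": "search engine benchmark"})])
print(searcher.search("open search", k=2))  # [(2, 'a1'), (1, 'b1')]
searcher.close()
```

The merge is only meaningful if scores from different indexes are comparable. With real engines, per-index term statistics (e.g. IDF) can differ, which is one more argument for merging thousands of small indexes into fewer large ones.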

Your Xapian index, is that compacted?

Don’t think so … you can find the source code and commands I ran for building the Xapian index here:

https://github.com/zooie/opensearch/tree/master/exp/software/ohsumed/xapian

I am looking for a way to consolidate the ranking scores from textual indexers with a custom ranker that my company has. Some example ways to achieve this include:

– The textual indexer searches within an explicitly given list of documents, or gives the ranking score for each document.

– Alternatively, it would also work for me if I could plug my custom ranker into the textual indexer to provide custom rank scores.

Can you kindly give some advice on how to achieve this?

This is an interesting comparison. I’ve been looking for some sources for evaluating an IRS, but I haven’t found anything yet. I’m working with Lucene and I think it is amazing… If any of you guys can help me, it would be very helpful. I’m from Cuba, and I’m just learning about IR.

thanks Slash


Interesting research! We are looking to implement Sphinx for our website’s search engine. I am not a developer, so I wanted to get your expert opinion on how much effort it would take to implement. The website is written in PHP, and we want the search engine to search 7,000 materials, each with title, description, and keyword fields.
